Combining DataFrames with Pandas

In many “real world” situations, the data that we want to use come in multiple files. We often need to combine these files into a single DataFrame to analyze the data. The pandas package provides various methods for combining DataFrames including merge and concat.

To work through the examples below, we first need to load the species and surveys files into pandas DataFrames. Before we start, we will make sure that libraries are currectly installed.

!pip install pandas matplotlib

Requirement already satisfied: pandas in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (0.23.0)

Requirement already satisfied: matplotlib in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (2.2.2)

Requirement already satisfied: python-dateutil>=2.5.0 in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (from pandas) (2.7.3)

Requirement already satisfied: pytz>=2011k in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (from pandas) (2018.4)

Requirement already satisfied: numpy>=1.9.0 in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (from pandas) (1.14.3)

Requirement already satisfied: cycler>=0.10 in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (from matplotlib) (0.10.0)

Requirement already satisfied: six>=1.10 in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (from matplotlib) (1.11.0)

Requirement already satisfied: pyparsing!=2.0.4,!=2.1.2,!=2.1.6,>=2.0.1 in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (from matplotlib) (2.2.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (from matplotlib) (1.0.1)

Requirement already satisfied: setuptools in /Users/asha0035/.local/share/virtualenvs/python-workshop-base-LFzz33nP/lib/python3.6/site-packages (from kiwisolver>=1.0.1->matplotlib) (39.2.0)

You are using pip version 10.0.1, however version 18.1 is available.

You should consider upgrading via the 'pip install --upgrade pip' command.

import pandas as pd

surveys_df = pd.read_csv("surveys.csv",

keep_default_na=False, na_values=[""])

surveys_df

| record_id | month | day | year | site_id | species_id | sex | hindfoot_length | weight | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 7 | 16 | 1977 | 2 | NL | M | 32.0 | NaN |

| 1 | 2 | 7 | 16 | 1977 | 3 | NL | M | 33.0 | NaN |

| 2 | 3 | 7 | 16 | 1977 | 2 | DM | F | 37.0 | NaN |

| 3 | 4 | 7 | 16 | 1977 | 7 | DM | M | 36.0 | NaN |

| 4 | 5 | 7 | 16 | 1977 | 3 | DM | M | 35.0 | NaN |

| 5 | 6 | 7 | 16 | 1977 | 1 | PF | M | 14.0 | NaN |

| 6 | 7 | 7 | 16 | 1977 | 2 | PE | F | NaN | NaN |

| 7 | 8 | 7 | 16 | 1977 | 1 | DM | M | 37.0 | NaN |

| 8 | 9 | 7 | 16 | 1977 | 1 | DM | F | 34.0 | NaN |

| 9 | 10 | 7 | 16 | 1977 | 6 | PF | F | 20.0 | NaN |

| 10 | 11 | 7 | 16 | 1977 | 5 | DS | F | 53.0 | NaN |

| 11 | 12 | 7 | 16 | 1977 | 7 | DM | M | 38.0 | NaN |

| 12 | 13 | 7 | 16 | 1977 | 3 | DM | M | 35.0 | NaN |

| 13 | 14 | 7 | 16 | 1977 | 8 | DM | NaN | NaN | NaN |

| 14 | 15 | 7 | 16 | 1977 | 6 | DM | F | 36.0 | NaN |

| 15 | 16 | 7 | 16 | 1977 | 4 | DM | F | 36.0 | NaN |

| 16 | 17 | 7 | 16 | 1977 | 3 | DS | F | 48.0 | NaN |

| 17 | 18 | 7 | 16 | 1977 | 2 | PP | M | 22.0 | NaN |

| 18 | 19 | 7 | 16 | 1977 | 4 | PF | NaN | NaN | NaN |

| 19 | 20 | 7 | 17 | 1977 | 11 | DS | F | 48.0 | NaN |

| 20 | 21 | 7 | 17 | 1977 | 14 | DM | F | 34.0 | NaN |

| 21 | 22 | 7 | 17 | 1977 | 15 | NL | F | 31.0 | NaN |

| 22 | 23 | 7 | 17 | 1977 | 13 | DM | M | 36.0 | NaN |

| 23 | 24 | 7 | 17 | 1977 | 13 | SH | M | 21.0 | NaN |

| 24 | 25 | 7 | 17 | 1977 | 9 | DM | M | 35.0 | NaN |

| 25 | 26 | 7 | 17 | 1977 | 15 | DM | M | 31.0 | NaN |

| 26 | 27 | 7 | 17 | 1977 | 15 | DM | M | 36.0 | NaN |

| 27 | 28 | 7 | 17 | 1977 | 11 | DM | M | 38.0 | NaN |

| 28 | 29 | 7 | 17 | 1977 | 11 | PP | M | NaN | NaN |

| 29 | 30 | 7 | 17 | 1977 | 10 | DS | F | 52.0 | NaN |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 35519 | 35520 | 12 | 31 | 2002 | 9 | SF | NaN | 24.0 | 36.0 |

| 35520 | 35521 | 12 | 31 | 2002 | 9 | DM | M | 37.0 | 48.0 |

| 35521 | 35522 | 12 | 31 | 2002 | 9 | DM | F | 35.0 | 45.0 |

| 35522 | 35523 | 12 | 31 | 2002 | 9 | DM | F | 36.0 | 44.0 |

| 35523 | 35524 | 12 | 31 | 2002 | 9 | PB | F | 25.0 | 27.0 |

| 35524 | 35525 | 12 | 31 | 2002 | 9 | OL | M | 21.0 | 26.0 |

| 35525 | 35526 | 12 | 31 | 2002 | 8 | OT | F | 20.0 | 24.0 |

| 35526 | 35527 | 12 | 31 | 2002 | 13 | DO | F | 33.0 | 43.0 |

| 35527 | 35528 | 12 | 31 | 2002 | 13 | US | NaN | NaN | NaN |

| 35528 | 35529 | 12 | 31 | 2002 | 13 | PB | F | 25.0 | 25.0 |

| 35529 | 35530 | 12 | 31 | 2002 | 13 | OT | F | 20.0 | NaN |

| 35530 | 35531 | 12 | 31 | 2002 | 13 | PB | F | 27.0 | NaN |

| 35531 | 35532 | 12 | 31 | 2002 | 14 | DM | F | 34.0 | 43.0 |

| 35532 | 35533 | 12 | 31 | 2002 | 14 | DM | F | 36.0 | 48.0 |

| 35533 | 35534 | 12 | 31 | 2002 | 14 | DM | M | 37.0 | 56.0 |

| 35534 | 35535 | 12 | 31 | 2002 | 14 | DM | M | 37.0 | 53.0 |

| 35535 | 35536 | 12 | 31 | 2002 | 14 | DM | F | 35.0 | 42.0 |

| 35536 | 35537 | 12 | 31 | 2002 | 14 | DM | F | 36.0 | 46.0 |

| 35537 | 35538 | 12 | 31 | 2002 | 15 | PB | F | 26.0 | 31.0 |

| 35538 | 35539 | 12 | 31 | 2002 | 15 | SF | M | 26.0 | 68.0 |

| 35539 | 35540 | 12 | 31 | 2002 | 15 | PB | F | 26.0 | 23.0 |

| 35540 | 35541 | 12 | 31 | 2002 | 15 | PB | F | 24.0 | 31.0 |

| 35541 | 35542 | 12 | 31 | 2002 | 15 | PB | F | 26.0 | 29.0 |

| 35542 | 35543 | 12 | 31 | 2002 | 15 | PB | F | 27.0 | 34.0 |

| 35543 | 35544 | 12 | 31 | 2002 | 15 | US | NaN | NaN | NaN |

| 35544 | 35545 | 12 | 31 | 2002 | 15 | AH | NaN | NaN | NaN |

| 35545 | 35546 | 12 | 31 | 2002 | 15 | AH | NaN | NaN | NaN |

| 35546 | 35547 | 12 | 31 | 2002 | 10 | RM | F | 15.0 | 14.0 |

| 35547 | 35548 | 12 | 31 | 2002 | 7 | DO | M | 36.0 | 51.0 |

| 35548 | 35549 | 12 | 31 | 2002 | 5 | NaN | NaN | NaN | NaN |

35549 rows × 9 columns

Concatenating DataFrames

We can use the concat function in pandas to append either columns or rows from one DataFrame to another. Let’s grab two subsets of our data to see how this works.

# Read in first 10 lines of surveys table

survey_sub = surveys_df.head(10)

# Grab the last 10 rows

survey_sub_last10 = surveys_df.tail(10)

# Reset the index values to the second dataframe appends properly

survey_sub_last10=survey_sub_last10.reset_index(drop=True)

# drop=True option avoids adding new index column with old index values

When we concatenate DataFrames, we need to specify the axis. axis=0 tells pandas to stack the second DataFrame under the first one. It will automatically detect whether the column names are the same and will stack accordingly. axis=1 will stack the columns in the second DataFrame to the RIGHT of the first DataFrame. To stack the data vertically, we need to make sure we have the same columns and associated column format in both datasets. When we stack horizonally, we want to make sure what we are doing makes sense (ie the data are related in some way).

# Stack the DataFrames on top of each other

vertical_stack = pd.concat([survey_sub, survey_sub_last10], axis=0)

# Place the DataFrames side by side

horizontal_stack = pd.concat([survey_sub, survey_sub_last10], axis=1)

Row Index Values and Concat

Have a look at the vertical_stack dataframe? Notice anything unusual? The row indexes for the two data frames survey_sub and survey_sub_last10 have been repeated. We can reindex the new dataframe using the reset_index() method.

Writing Out Data to CSV

We can use the to_csv command to do export a DataFrame in CSV format. Note that the code below will by default save the data into the current working directory. We can save it to a different folder by adding the foldername and a slash to the file vertical_stack.to_csv('foldername/out.csv'). We use the ‘index=False’ so that pandas doesn’t include the index number for each line.

# Write DataFrame to CSV

vertical_stack.to_csv('output/out.csv', index=False)

Check out your working directory to make sure the CSV wrote out properly, and that you can open it! If you want, try to bring it back into Python to make sure it imports properly.

# For kicks read our output back into Python and make sure all looks good

new_output = pd.read_csv('output/out.csv', keep_default_na=False, na_values=[""])

Challenge - Combine Data

In the data folder, there are two survey data files: survey2001.csv and survey2002.csv. Read the data into Python and combine the files to make one new data frame. Create a plot of average plot weight by year grouped by sex. Export your results as a CSV and make sure it reads back into Python properly.

Joining DataFrames

When we concatenated our DataFrames we simply added them to each other - stacking them either vertically or side by side. Another way to combine DataFrames is to use columns in each dataset that contain common values (a common unique id). Combining DataFrames using a common field is called “joining”. The columns containing the common values are called “join key(s)”. Joining DataFrames in this way is often useful when one DataFrame is a “lookup table” containing additional data that we want to include in the other.

NOTE: This process of joining tables is similar to what we do with tables in an SQL database.

For example, the species.csv file that we’ve been working with is a lookup table. This table contains the genus, species and taxa code for 55 species. The species code is unique for each line. These species are identified in our survey data as well using the unique species code. Rather than adding 3 more columns for the genus, species and taxa to each of the 35,549 line Survey data table, we can maintain the shorter table with the species information. When we want to access that information, we can create a query that joins the additional columns of information to the Survey data.

Storing data in this way has many benefits including:

- It ensures consistency in the spelling of species attributes (genus, species and taxa) given each species is only entered once. Imagine the possibilities for spelling errors when entering the genus and species thousands of times!

- It also makes it easy for us to make changes to the species information once without having to find each instance of it in the larger survey data.

- It optimizes the size of our data.

Joining Two DataFrames

To better understand joins, let’s grab the first 10 lines of our data as a subset to work with. We’ll use the .head method to do this. We’ll also read in a subset of the species table.

# Read in first 10 lines of surveys table

survey_sub = surveys_df.head(10)

### Download speciesSubset.csv file from web

import urllib.request

url = 'https://bit.ly/2DfqN6C'

urllib.request.urlretrieve(url, 'speciesSubset.csv')

# Import a small subset of the species data designed for this part of the lesson.

# It is stored in the data folder.

species_sub = pd.read_csv('speciesSubset.csv', keep_default_na=False, na_values=[""])

In this example, species_sub is the lookup table containing genus, species, and taxa names that we want to join with the data in survey_sub to produce a new DataFrame that contains all of the columns from both species_df and survey_df

species_sub.columns

outputIndex(['species_id', 'genus', 'species', 'taxa'], dtype='object')

survey_sub.columns

outputIndex(['record_id', 'month', 'day', 'year', 'site_id', 'species_id', 'sex', 'hindfoot_length', 'weight'], dtype='object')

In our example, the join key is the column containing the two-letter species identifier, which is called species_id.

Now that we know the fields with the common species ID attributes in each DataFrame, we are almost ready to join our data. However, since there are different types of joins, we also need to decide which type of join makes sense for our analysis.

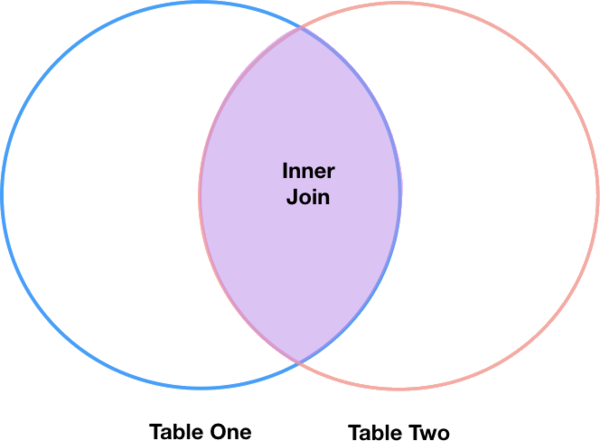

Inner Joins

The most common type of join is called an inner join. An inner join combines two DataFrames based on a join key and returns a new DataFrame that contains only those rows that have matching values in both of the original DataFrames.

Inner joins yield a DataFrame that contains only rows where the value being joins exists in BOTH tables. An example of an inner join, adapted from this page is below:

The pandas function for performing joins is called merge and an Inner join is the default option:

merged_inner = pd.merge(left=survey_sub,right=species_sub, left_on='species_id', right_on='species_id')

# In this case `species_id` is the only column name in both dataframes, so if we skippd `left_on`

# And `right_on` arguments we would still get the same result

# What's the size of the output data?

merged_inner.shape

merged_inner

| record_id | month | day | year | site_id | species_id | sex | hindfoot_length | weight | genus | species | taxa | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 7 | 16 | 1977 | 2 | NL | M | 32.0 | NaN | Neotoma | albigula | Rodent |

| 1 | 2 | 7 | 16 | 1977 | 3 | NL | M | 33.0 | NaN | Neotoma | albigula | Rodent |

| 2 | 3 | 7 | 16 | 1977 | 2 | DM | F | 37.0 | NaN | Dipodomys | merriami | Rodent |

| 3 | 4 | 7 | 16 | 1977 | 7 | DM | M | 36.0 | NaN | Dipodomys | merriami | Rodent |

| 4 | 5 | 7 | 16 | 1977 | 3 | DM | M | 35.0 | NaN | Dipodomys | merriami | Rodent |

| 5 | 8 | 7 | 16 | 1977 | 1 | DM | M | 37.0 | NaN | Dipodomys | merriami | Rodent |

| 6 | 9 | 7 | 16 | 1977 | 1 | DM | F | 34.0 | NaN | Dipodomys | merriami | Rodent |

| 7 | 7 | 7 | 16 | 1977 | 2 | PE | F | NaN | NaN | Peromyscus | eremicus | Rodent |

The result of an inner join of survey_sub and species_sub is a new DataFrame that contains the combined set of columns from survey_sub and species_sub. It only contains rows that have two-letter species codes that are the same in both the survey_sub and species_sub DataFrames. In other words, if a row in survey_sub has a value of species_id that does not appear in the species_id column of species, it will not be included in the DataFrame returned by an inner join. Similarly, if a row in species_sub has a value of species_id that does not appear in the species_id column of survey_sub, that row will not be included in the DataFrame returned by an inner join.

The two DataFrames that we want to join are passed to the merge function using the left and right argument. The left_on='species' argument tells merge to use the species_id column as the join key from survey_sub (the left DataFrame). Similarly , the right_on='species_id' argument tells merge to use the species_id column as the join key from species_sub (the right DataFrame). For inner joins, the order of the left and right arguments does not matter.

The result merged_inner DataFrame contains all of the columns from survey_sub (record id, month, day, etc.) as well as all the columns from species_sub (species_id, genus, species, and taxa).

Notice that merged_inner has fewer rows than survey_sub. This is an indication that there were rows in surveys_df with value(s) for species_id that do not exist as value(s) for species_id in species_df.

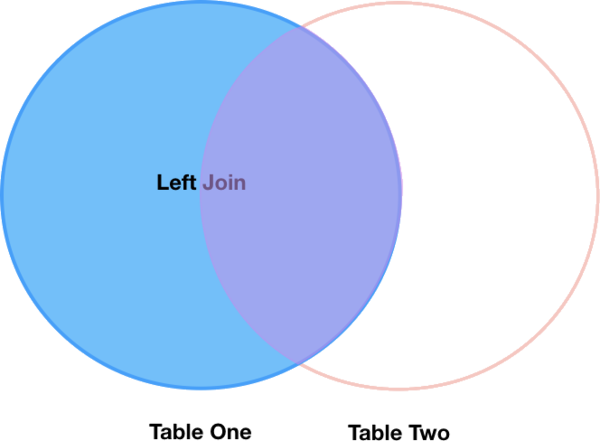

Left Joins

What if we want to add information from species_sub to survey_sub without losing any of the information from survey_sub? In this case, we use a different type of join called a “left outer join”, or a “left join”.

Like an inner join, a left join uses join keys to combine two DataFrames. Unlike an inner join, a left join will return all of the rows from the left DataFrame, even those rows whose join key(s) do not have values in the right DataFrame. Rows in the left DataFrame that are missing values for the join key(s) in the right DataFrame will simply have null (i.e., NaN or None) values for those columns in the resulting joined DataFrame.

Note: a left join will still discard rows from the right DataFrame that do not have values for the join key(s) in the left DataFrame.

A left join is performed in pandas by calling the same merge function used for inner join, but using the how='left' argument:

merged_left = pd.merge(left=survey_sub,right=species_sub, how='left', left_on='species_id', right_on='species_id')

merged_left

| record_id | month | day | year | site_id | species_id | sex | hindfoot_length | weight | genus | species | taxa | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 7 | 16 | 1977 | 2 | NL | M | 32.0 | NaN | Neotoma | albigula | Rodent |

| 1 | 2 | 7 | 16 | 1977 | 3 | NL | M | 33.0 | NaN | Neotoma | albigula | Rodent |

| 2 | 3 | 7 | 16 | 1977 | 2 | DM | F | 37.0 | NaN | Dipodomys | merriami | Rodent |

| 3 | 4 | 7 | 16 | 1977 | 7 | DM | M | 36.0 | NaN | Dipodomys | merriami | Rodent |

| 4 | 5 | 7 | 16 | 1977 | 3 | DM | M | 35.0 | NaN | Dipodomys | merriami | Rodent |

| 5 | 6 | 7 | 16 | 1977 | 1 | PF | M | 14.0 | NaN | NaN | NaN | NaN |

| 6 | 7 | 7 | 16 | 1977 | 2 | PE | F | NaN | NaN | Peromyscus | eremicus | Rodent |

| 7 | 8 | 7 | 16 | 1977 | 1 | DM | M | 37.0 | NaN | Dipodomys | merriami | Rodent |

| 8 | 9 | 7 | 16 | 1977 | 1 | DM | F | 34.0 | NaN | Dipodomys | merriami | Rodent |

| 9 | 10 | 7 | 16 | 1977 | 6 | PF | F | 20.0 | NaN | NaN | NaN | NaN |

The result DataFrame from a left join (merged_left) looks very much like the result DataFrame from an inner join (merged_inner) in terms of the columns it contains. However, unlike merged_inner, merged_left contains the same number of rows as the original survey_sub DataFrame. When we inspect merged_left, we find there are rows where the information that should have come from species_sub (i.e., species_id, genus, and taxa) is missing (they contain NaN values):

merged_left[ pd.isnull(merged_left.genus) ]

| record_id | month | day | year | site_id | species_id | sex | hindfoot_length | weight | genus | species | taxa | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 5 | 6 | 7 | 16 | 1977 | 1 | PF | M | 14.0 | NaN | NaN | NaN | NaN |

| 9 | 10 | 7 | 16 | 1977 | 6 | PF | F | 20.0 | NaN | NaN | NaN | NaN |

These rows are the ones where the value of species_id from survey_sub (in this case, PF) does not occur in species_sub.

Other join types

The pandas merge function supports two other join types:

- Right (outer) join: Invoked by passing

how='right'as an argument. Similar to a left join, except all rows from therightDataFrame are kept, while rows from the left DataFrame without matching join key(s) values are discarded. - Full (outer) join: Invoked by passing

how='outer'as an argument. This join type returns the all pairwise combinations of rows from both DataFrames; i.e., the result DataFrame willNaNwhere data is missing in one of the dataframes. This join type is very rarely used.

Extra Challenge 1

Create a new DataFrame by joining the contents of the surveys.csv and speciesSubset.csv tables. Then calculate and plot the distribution of:

- taxa by plot

- taxa by sex by plot